The concept of average IQ in the United States is often discussed in education, psychology, and social research. However, IQ scores are frequently misunderstood or oversimplified. To interpret them correctly, it is important to understand what IQ actually measures, what it does not measure, and how averages should be used responsibly.

This article provides a factual, neutral overview of the average IQ in the United States, while emphasizing the limitations of IQ testing and avoiding harmful conclusions.

What Is IQ?

IQ, or Intelligence Quotient, is a standardized score used in psychology to assess certain types of cognitive abilities. These abilities typically include:

- Logical and abstract reasoning

- Pattern recognition and problem-solving

- Verbal comprehension and language skills

- Working memory and mental processing

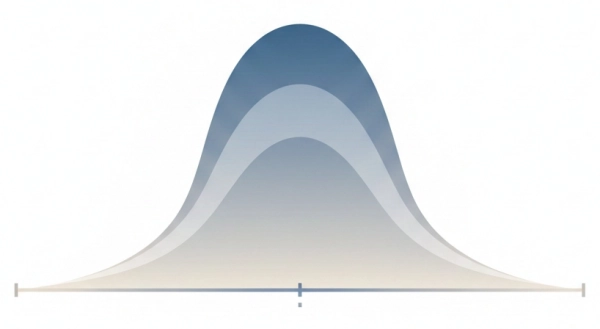

Modern IQ tests are carefully designed so that 100 represents the average score for a specific population and age group. Most people score close to this average, with fewer individuals at the higher and lower ends of the distribution. You can explore how these scores are structured in more detail in the IQ scale explained from low to genius.
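As a rough illustration of this distribution, the sketch below assumes that scores follow a normal curve with a mean of 100 and a standard deviation of 15. The standard deviation is the convention used by most modern tests, but it is an assumption here and is not stated above. The helper names `share_between` and `percentile` are hypothetical.

```python
from statistics import NormalDist

# Assumption: scores are normally distributed with mean 100 and SD 15.
# The SD of 15 is a common convention, not a figure taken from this article.
IQ_SCALE = NormalDist(mu=100, sigma=15)


def share_between(low: float, high: float) -> float:
    """Fraction of the population expected to score between low and high."""
    return IQ_SCALE.cdf(high) - IQ_SCALE.cdf(low)


def percentile(score: float) -> float:
    """Percentage of the population expected to score at or below `score`."""
    return 100 * IQ_SCALE.cdf(score)


if __name__ == "__main__":
    print(f"Share scoring 85 to 115: {share_between(85, 115):.1%}")
    print(f"Share scoring 70 to 130: {share_between(70, 130):.1%}")
    print(f"Percentile of a score of 100: {percentile(100):.0f}")
```

Under these assumptions, about 68% of people fall within 15 points of the average and about 95% within 30 points, which is what "most people score close to this average" means in practice.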

It is important to understand that IQ is a relative, statistical measurement, not a direct measure of intelligence in a broad or complete sense. IQ tests do not evaluate creativity, emotional understanding, or social skills, which are more closely related to emotional intelligence (EQ) and which also contribute to real-world success.

What Is the Average IQ in the United States?

According to the norms used by major IQ test publishers, the average IQ in the United States typically falls between 98 and 100. This is consistent with the figures given in related discussions of average IQ in the United States and in broader comparisons of IQ by country.

This range does not suggest that Americans are becoming more or less intelligent over time. Instead, it reflects how IQ tests are periodically updated and recalibrated to keep the population average near 100.

An individual with an average IQ score performs similarly to most people in their age group on the specific mental tasks measured by the test. This indicates typical cognitive functioning rather than exceptional strength or weakness.

Why the Average Is Around 100

IQ tests are norm-referenced, meaning they are designed to compare individuals against a representative sample of the population rather than against a fixed standard.

Over time, test developers:

- Update test questions

- Adjust scoring scales

- Renorm tests using new population samples

This ongoing process keeps the average score close to 100. It is also connected to the Flynn Effect, the observed rise in raw test performance across generations: because tests are renormed against new samples, raw cognitive performance can change over time while the reported average remains stable.

Because of this design:

- The national average does not indicate absolute intelligence levels

- Small differences between studies are expected

- Averages reflect test calibration, not innate ability
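The norm-referencing and renorming described in this section can be sketched in a few lines. This is a simplified illustration, not how any particular publisher scores its tests: the raw scores are invented, the standard deviation of 15 is an assumed convention, and `make_scorer` is a hypothetical helper.

```python
from statistics import mean, pstdev


def make_scorer(norming_raw_scores, target_mean=100.0, target_sd=15.0):
    """Build a raw-score-to-IQ converter from a norming sample.

    Assumption: a simple linear (z-score) rescaling; real tests use
    more elaborate, age-banded norm tables.
    """
    mu = mean(norming_raw_scores)
    sigma = pstdev(norming_raw_scores)
    if sigma == 0:
        raise ValueError("norming sample must contain varied scores")

    def to_iq(raw_score):
        # Express the raw score relative to the norming sample,
        # then place it on the mean-100 scale.
        return target_mean + target_sd * (raw_score - mu) / sigma

    return to_iq


# Hypothetical raw scores (number of items answered correctly).
old_sample = [38, 42, 45, 47, 50, 53, 55, 58, 62]
# A later cohort that answers five more items correctly across the board.
new_sample = [raw + 5 for raw in old_sample]

old_norms = make_scorer(old_sample)
new_norms = make_scorer(new_sample)

# The same later cohort, scored against old norms and against its own norms.
new_mean_on_old_norms = mean(old_norms(raw) for raw in new_sample)
new_mean_on_new_norms = mean(new_norms(raw) for raw in new_sample)

print(f"Later cohort on old norms: {new_mean_on_old_norms:.1f}")
print(f"Later cohort on new norms: {new_mean_on_new_norms:.1f}")
```

Scored against the older norms, the later cohort averages above 100; scored against its own norms, it averages 100 again. The average reflects the calibration sample, which is why it says little about absolute ability levels.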

Factors That Influence IQ Scores

Average IQ scores in the United States, as in any country, are influenced by multiple environmental and social factors, many of which are discussed in factors affecting IQ test results. These include:

- Access to quality education

- Childhood nutrition and healthcare

- Exposure to reading and learning opportunities

- Familiarity with standardized testing

- Language and cultural context

- Socioeconomic conditions

These factors shape how people perform on tests, so differences in average scores should not be read as differences in inherent intelligence. This distinction is critical when interpreting data about IQ and academic achievement or school outcomes.

What an Average IQ Does—and Does Not—Mean

An average IQ score generally indicates:

- Typical performance on standardized cognitive tasks

- Normal ability to learn new information

- Adequate reasoning and problem-solving skills

- Comparable cognitive functioning to most peers

However, an average IQ score does not measure many important human abilities, including:

- Creativity and original thinking (IQ vs creativity)

- Emotional intelligence (EQ)

- Social awareness and communication skills

- Motivation, persistence, or discipline

- Artistic, musical, mechanical, or athletic talent

Many careers and life paths depend far more on EQ, adaptability, and effort than on IQ alone, as explored in jobs where EQ matters more than IQ.

IQ and Individual Differences

While national averages are useful for research and educational planning, they do not describe individuals. People with similar IQ scores can differ dramatically in their abilities, interests, and life outcomes.

Two individuals with the same IQ may vary in:

- Career success

- Academic strengths

- Problem-solving styles

- Emotional intelligence and leadership ability

This is why experts caution against labeling people based solely on scores. Even people who worry that they are “not naturally smart” can succeed through skills, habits, and learning strategies.

Is the Average IQ in the United States “Good” or “Bad”?

From a scientific standpoint, this question is misleading. An average IQ is neither good nor bad; it is simply a reference point used for comparison.

Human development and success depend far more on:

- Education quality

- Access to opportunities

- Physical and mental health

- Personal effort and persistence

- Social and emotional skills

IQ is only one small piece of a much larger picture.

Responsible Use of IQ Statistics

When discussing average IQ figures, responsible interpretation is essential. This includes:

- Avoiding value judgments about populations

- Avoiding comparisons that rank people as superior or inferior

- Recognizing the limits of online and standardized tests, including free online IQ tests

- Emphasizing education, opportunity, and context

Using IQ data responsibly helps promote understanding rather than misinformation or stigma.

The Bottom Line

The average IQ in the United States is approximately 98–100, reflecting how standardized intelligence tests are designed and calibrated. This number represents typical performance on specific cognitive tasks, not overall intelligence, character, or human potential.

IQ averages can be useful for research and educational planning, but they should never be used to judge individuals or groups. Intelligence is complex, multifaceted, and shaped by environment, experience, and personal growth.

Understanding IQ responsibly allows for informed discussion without stereotypes, discrimination, or harm.
